The Self-Driving Automaton

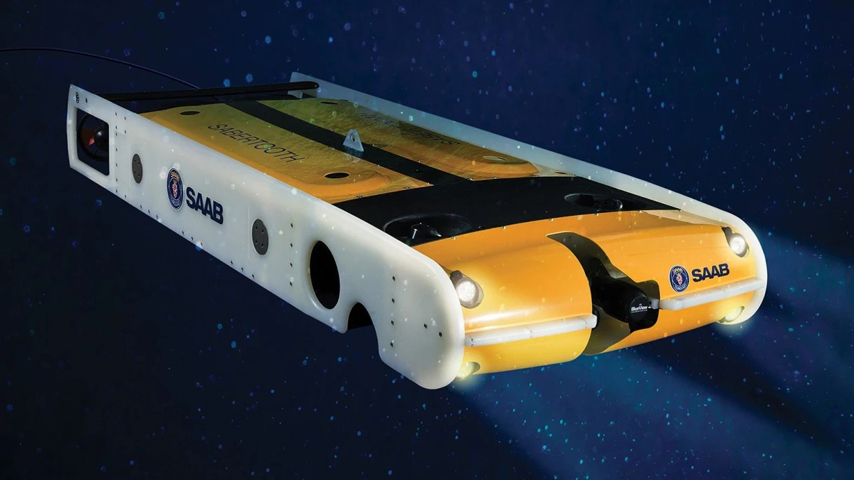

A Jackrabbot robot wearing a Stanford tie and sun-hat. Bot image: Stanford

The autonomous car is easy. Yes, compared to the doltish vehicles of decades past, completely dependent, as they were, for all navigation on the people at their wheels—be they drunk, sober, passive, aggressive, reckless, homicidal, suicidal, erratic, law-fearing, or plain bonkers—the self-driving auto is a marvel to behold. But, to get a human-free car to safely guide itself through the streets and highways of our land, there’s already a baseline to go by: the rules of the road. And our computational wonderthings are very good at following rules.

But there’s another arena of transportation that’s more lawless. Or, at least, the rules are unspoken (and have no weight in a court of law). The sidewalk. Navigating the strips of concrete that line our streets is a much more subtle affair, dependent on body language, unconscious conventions, and social and cultural norms. Google Chauffeur installed on a smaller machine wouldn’t do too well on a pedestrian pathway.

Now a team of researchers at Stanford has taken up the challenge of creating a self-navigating machine for the sidewalk. Their R2-D2-sized autonomous automaton is named Jackrabbot, after its hare-like form. To successfully steer itself through the complex world of walkways, Jackrabbot relies on several methodologies. “The traditional CNN deep learning approaches are not sufficiently adequate,” says Silvio Savarese, a professor of computer science and the director of Stanford’s Computational Vision and Geometry Lab. “What my group is trying to do is integrate more traditional machine learning with neural networks.”

The goal is to have a robot that moves like a pedestrian. To do so, it has to understand a lot more than human-to-human interactions. The sidewalk, after all, hosts skateboarders, bicyclists, hoverboarders, wheelchairs, dog walkers, and squirrels in addition to unadorned people out for a stroll. “You can see that the complexity of interaction is much richer than that between humans,” says Savarese. “For example, pedestrians and bikes use a lot of conventions and subtle cues, in close proximity, without accidents. Well, sometimes accidents, but mostly not.”

To understand this complexity, and get it into the Jackrabbot, the team collected a massive data set of interactions on collegiate walkways. “What we did is fly a drone over the Stanford campus,” says Savarese, “and we recorded hours and hours of footage of all possible actors that populate the campus: pedestrians, bikes, skateboards, strollers. All these agents and trajectories are for learning inter-class relationships.” The data also includes non-agents such as sidewalk, grass, trees, fountains, and staircases.

A side effect of the project is that, in learning how best to inform Jackrabbot on how to navigate among humans, they’ve learned a lot about how humans navigate among humans. Their data could be used by civil engineers and sociologists hoping to better understand the flow of humanity. And their technique needn’t be limited to understanding human interactions. In fact, one colleague at the school has put the team and their practices to use in an attempt to track the relationships of hens in large colonies. And, of course, those autonomous cars could make use of the approach. There’s more to the rules of the road than the rules, after all. At urban intersections with stop signs, self-driving cars will have to understand when a walker’s hesitation is just a safety check, and when it’s a sign that the right of way has been surrendered.

More immediate applications for the Jackrabbot could include assisting shoppers, patrolling the campus as a mobile information booth, and solving the “last mile problem” (that is, unloading cargo delivered by self-driving truck).

So far, Jackrabbot has done the majority of its meandering indoors. It’ll spend more time outside on the pathways of the Stanford campus this fall, when the team is sure all safety issues have been worked out. Then finally, perhaps, robots will become the autonomous things they were first imagined to be.

“It’s an exciting time for AI,” says Savarese. “My group is really trying to help make an ecosystem where humans and robots are interacting in successful and imaginative ways.”

Michael Abrams is an independent writer.
