5 Lessons from the DARPA Robotics Challenge

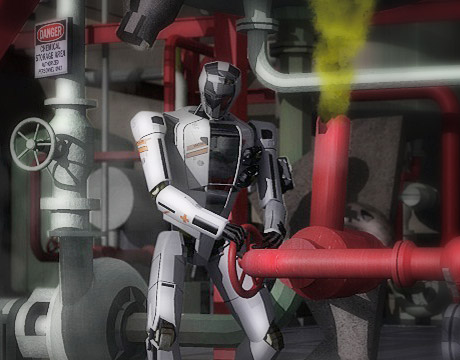

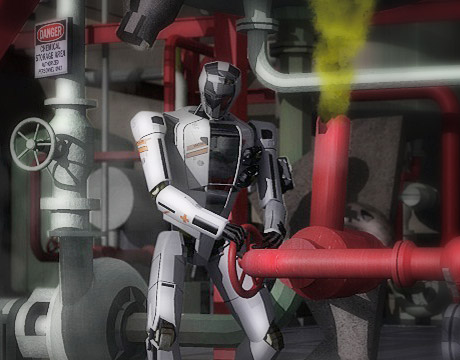

An illustration of an example disaster response scenario. Image: DARPA

The Fukushima disaster in Japan in 2011, which created a toxic environment too dangerous for human responders to enter, inspired the Defense Advanced Research Projects Agency to create the DARPA Robotics Challenge (DRC), with the goal of developing semi-autonomous ground robots for complex tasks in dangerous conditions. According to DARPA, useable technologies that result from the DRC will hopefully lead to the “development of robots featuring task-level autonomy that can operate in the hazardous, degraded conditions common in disaster zones.”

The 2015 DRC finals, held in Pomona, CA, involved 24 teams from around the globe. Each team had to maneuver its own highly specialized robot through eight autonomous and semi-autonomous mobility and manipulation tasks to demonstrate its ability to operate in uncontrolled and unpredictable situations. A joint team from Worcester Polytechnic Institute and Carnegie Mellon University (WPI-CMU), made up of faculty, engineers, and students, placed seventh. Its robot, named WARNER, was a six-foot-tall humanoid Atlas made by Boston Dynamics.

WARNER successfully completed seven of eight tasks on each day of the competition, which included driving a vehicle, opening a door, using power tools, and turning a valve. The team scored 14 out of 16 possible points over the two-day event. A program design error and an arm hardware failure caused its two attempts at the drill/cutting task to fail. However, WPI-CMU was the only team that attempted all tasks, did not require physical human intervention (a reset), and did not fall during any of the missions.

What They Learned

The Institute of Electrical and Electronics Engineers (IEEE) published a paper by WPI-CMU team members titled “No Falls, No Resets: Reliable Humanoid Behavior in the DARPA Robotics Challenge.” In it, they describe their approach to the DRC, their strategy for avoiding failures that required physical human intervention, and the lessons they learned. Five major takeaways include:

- Walk with your entire body. All teams in the challenge failed to use aspects of the physical space to help their robots move, such as stair railings, or putting a hand on the wall to help cross rough terrain. “Even drunk people are smart enough to use nearby supports,” they write. “Why didn’t our robots do this? We avoided contacts and the resultant structural changes. More contacts make tasks mechanically easier, but algorithmically more complicated.”

- Design robots to survive failure and recover. The authors advise that robustness to falls (avoiding damage) and fall recovery (getting back up) need to be designed in from the start, not retrofitted to a completed humanoid design. The Atlas robot was too top-heavy and its arms too weak to reliably get up from a fall. The team has since been exploring inflatable robot designs as one approach to fall-tolerant robots.

- The most cost-effective research area to improve robot performance is human-robot interaction. Developing ways to avoid and survive operator errors is crucial for real-world robotics. Interfaces must be designed to help eliminate errors, reduce the effect of errors, and speed the recovery or implement “undo” operations when errors happen. “Interfaces need to be idiot-proof and require no typing, have no check boxes, and minimize the number of options for the operator,” writes the team.

- Be ready for the worst case. On the second day of competition, the team lost the DARPA-provided communications between the operators and the robot for at least six minutes while in a full communication zone, and the outage counted against the team’s time. “Our lesson here,” they state, “is that for real robustness, we should have planned for conditions worse than expected. We could have easily programmed behaviors to be initiated autonomously if communications unexpectedly failed.”

- The field of humanoid robots is flawed. In situations where the researchers did not control the test, most humanoid robots, even older and well-tested designs, performed poorly and often fell. “It is clear that our field needs to put much more emphasis on building reliable systems, relative to simulations and theoretical results,” writes the team. “We need to understand why our current hardware and software approaches are so unreliable.”
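
The “Be ready for the worst case” lesson mentions behaviors that start autonomously when communications fail. One way that idea might look in practice is a simple watchdog timer, sketched here in Python. This is an illustration only, not the WPI-CMU team’s code: the `CommsWatchdog` class, the five-second timeout, and the `hold_safe_pose` behavior name are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class CommsWatchdog:
    """Hypothetical watchdog: runs a fallback once if the operator link goes quiet."""

    timeout_s: float                 # assumed silence threshold, in seconds
    on_timeout: Callable[[], None]   # fallback behavior to start autonomously
    last_heard_s: float = 0.0
    tripped: bool = False

    def heartbeat(self, now_s: float) -> None:
        """Record that an operator message arrived at time now_s."""
        self.last_heard_s = now_s
        self.tripped = False

    def poll(self, now_s: float) -> bool:
        """Call from the control loop; returns True while the link is considered lost."""
        if not self.tripped and now_s - self.last_heard_s > self.timeout_s:
            self.tripped = True
            self.on_timeout()        # fires once per outage, not on every poll
        return self.tripped


# Usage sketch with explicit timestamps so the behavior is easy to follow.
started = []
watchdog = CommsWatchdog(timeout_s=5.0,
                         on_timeout=lambda: started.append("hold_safe_pose"))
watchdog.heartbeat(0.0)   # operator message received
watchdog.poll(3.0)        # link still considered healthy
watchdog.poll(6.0)        # more than 5 s of silence: fallback starts
watchdog.poll(7.0)        # still lost, but the fallback is not restarted
watchdog.heartbeat(8.0)   # link restored, watchdog re-arms
```

Passing the current time in explicitly, rather than reading a clock inside the class, keeps the sketch easy to test; a real robot would more likely feed it from the control loop’s own clock.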

High-Value Experience

According to Mike Gennert, director of WPI’s Robotics Program, the greatest experience for the team was learning how hard it is to design and operate humanoid robots. WPI team members had no previous experience with humanoid robots and, although most of the techniques were not quite cutting edge, they were advanced. “That meant extensive testing to ensure that the overall system would perform,” says Gennert. “We also learned a lot about the challenging problems that remain, such as balance and stability, robust and adaptive control, integrating perception and manipulation, and the need for better sensors, especially haptics.”

The team is still working with its Atlas robot. About 30 students are involved in 12 different projects, including learning for walking, footstep placement, two-handed coordination, and getting up from falls.

“This project gave us experience with very advanced robotic hardware and software,” adds Gennert. “We continue to use them in our teaching and research. Our students who have graduated have gone on to great jobs and graduate schools, in no small part due to what they learned in the competition. I could not be more proud of this team and what it has accomplished.”

Mark Crawford is an independent writer.