Robotic Dance Instructors Hit the Floor

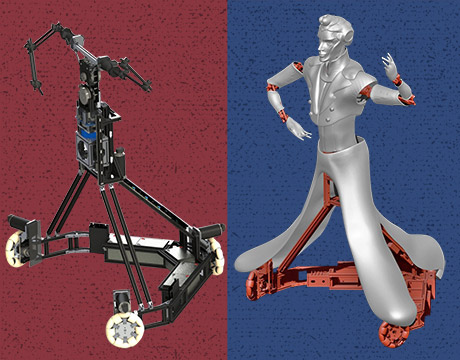

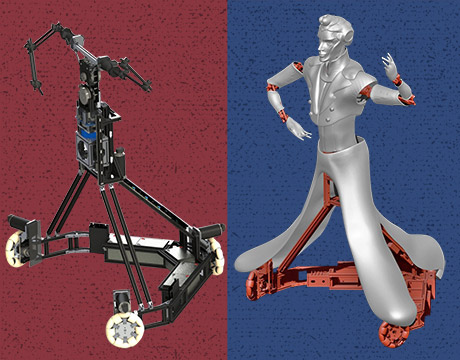

A CAD rendering of the robot dance instructor. Image: System Robotics Laboratory, Tohoku University

Two partners dance and sway to the rhythm, one leading, the other learning and following. This sounds like a typical night on “Dancing with the Stars,” but what if one of the dancers happened to be a robot? Not only is this becoming a reality, but you might actually find that the non-human in this scenario is a better and more encouraging teacher than many humans.

Diego Felipe Paez Granados began working with a team at Tohoku University in Sendai, Japan, that wanted to turn a robot into, if not a winning contestant on “Dancing with the Stars,” then at least a machine capable of giving adequate dance lessons to an amateur. Now a postdoctoral researcher at the university, Granados (along with his robot) has made great strides.

“Before I was involved, they developed an initial model that was a female dancer that tried to predict the steps of the partner,” Granados says of researchers at the university. “While I was on board it was about the opposite: ‘Can the robot teach you and guide you?’”

The mobile robot uses a tripod mounted on a base fitted with three multidimensional wheels. It has four degrees of freedom, designed to emulate a male dancer. This is something of a simplification of the human body, but the result is an adequate emulation of a person’s motion while dancing. “The whole idea of the project is to have real-time control so we can know what the actuators’ output is,” he says. “We have sensors and laser range finders to obtain the fifth position.”

Three actuators in the robot’s lower body control the mobile base, a linear actuator in the middle section controls the rise and fall of the dance, and three actuators in the upper body reproduce the torso, arm, and shoulder motions. The shoulder is driven through a four-bar linkage.

Admittedly not much of a dancer himself, Granados went to a nearby dance academy to understand dancing and the human interaction involved before building his robot. “You gain a new appreciation for [dancing],” he says.

So how does the interaction between human and robot begin?

“Initially the person goes to the robot with a high position of the arms and then the robot, with a screen for the face, explains the steps and motions that person should do and the names of the steps it’s going to teach,” he says. “The person tries freely without the robot, following the screen, then the person tries directly [in contact] with the robot. The robot initially uses speech to tell the person what they should do and, after several trials, the robot stops using speech. The robot then guides them through the dance and it has a scoring system to show how you’re doing at your dancing.”

Sessions with the robot are typically 30 minutes to an hour.

The biggest challenge in the project was understanding not only how a person moves while dancing, but also how the robot could communicate what the person might be doing wrong and help correct it.

“We’d like to eventually have a collision avoidance system and navigation through a room. I’m also working on putting a better adaptive system into the robot,” he says. “Right now, it takes five or six sessions to adapt to a person, but I want it faster.”

So far, the robot has been limited to teaching the waltz. While Granados says it doesn’t yet feel like a human to him, the robot has proved particularly good at assessing how advanced a person’s dancing is. He hopes the work can expand beyond dancing into other fields such as bioengineering, where it could assist with the movement of exoskeletons.

“It’s fun to hear about people’s reactions,” he says. “Dancing is meaningful to so many, and this is definitely a different way to learn.”

Eric Butterman is an independent writer.

I’m also working on putting a better adaptive system into the robot. Right now, it takes five or six sessions to adapt to a person but I want it faster.Prof. Diego Granados, Tohoku University