Sensors Allow Robots to Feel Sensation

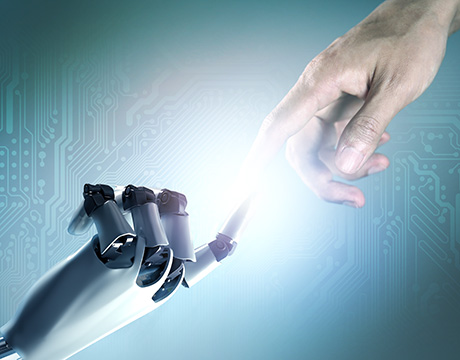

The line between human and machine is getting thinner every day. We have robots that can reason, predict, and even work in partnership with humans and other robots. But in their interactions with the physical world, these machines have always been limited.

That is changing.

A group of engineers from Stanford University’s Zhenan Bao Research Group, in partnership with Seoul National University’s College of Engineering, has developed an artificial nerve that, when used with a robotic “brain,” allows robots to feel and react to external stimuli just as we do. Soon, this could become a key part of a multisensory artificial nervous system that empowers the next generation of thinking, feeling robots. The technology could also be used in prosthetic limbs to allow patients to “feel” and interact with their replacement body parts just as they would natural limbs.

It’s a complex technology, but the concept is simple. In our skin, we have sensors that can detect even the lightest touch, neurons that transmit that touch to other parts of the body, and synapses that take that information and translate it into the feelings that we recognize and respond to.

A touch on the knee first causes the muscles in that area to stretch, sending impulses up the associated neurons to the synapses, which recognize the response and send signals to the knee muscles to contract reflexively and to the brain to recognize the sensation. We call it an involuntary reaction, but it’s anything but automatic.

“The artificial mechanosensory nerves are composed of three essential components: mechanoreceptors (resistive pressure sensors), neurons (organic ring oscillators), and synapses (organic electrochemical transistors),” says Tae-Woo Lee, an associate professor in the Department of Materials Science and Engineering, Hybrid Materials at Seoul National University who worked on the project. “The pressure information from artificial mechanoreceptors can be converted to action potentials through artificial neurons. Multiple action potentials can be integrated into an artificial synapse to actuate biological muscles and recognize braille characters.”

The Bao Lab’s artificial system mimics human functionality by linking dozens of different pressure sensors together, creating a voltage boost between their electrodes whenever a touch is detected. This change is recognized by a ring oscillator, which converts the voltage change into a series of electrical pulses that are picked up by a third component, the synaptic transistor. The transistor translates those transmission pulses into patterns that match the patterns that organic neurons transmit in the brain.
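The three-stage signal chain described above can be illustrated with a toy numerical model. This is only a sketch under stated assumptions, not the researchers' circuit: the function names, the linear pressure-to-frequency mapping, and every parameter value (`hz_per_kpa`, `decay`, `gain`, the time step) are hypothetical and chosen purely for illustration. It shows sensor readings setting a pulse rate, the pulse rate producing a pulse train, and a leaky integrator standing in for the synaptic transistor.

```python
def oscillator_frequency(pressures_kpa, hz_per_kpa=2.0):
    """Ring-oscillator stage: combined pressure sets the pulse rate in Hz.

    The linear mapping and hz_per_kpa are assumptions for illustration.
    """
    return hz_per_kpa * sum(pressures_kpa)


def pulse_train(freq_hz, duration_s=1.0, dt=0.001):
    """Emit a list of 0/1 samples, with roughly freq_hz pulses per second."""
    pulses = []
    phase = 0.0
    for _ in range(round(duration_s / dt)):
        phase += freq_hz * dt      # accumulate fractional cycles
        if phase >= 1.0:           # one full cycle elapsed: fire a pulse
            pulses.append(1)
            phase -= 1.0
        else:
            pulses.append(0)
    return pulses


def synapse_response(pulses, decay=0.99, gain=1.0):
    """Synapse stage modeled as a leaky integrator; returns the peak level.

    Closely spaced pulses build on each other before the level leaks away,
    so faster pulse trains produce a larger peak.
    """
    level = 0.0
    peak = 0.0
    for p in pulses:
        level = decay * level + gain * p
        peak = max(peak, level)
    return peak


def nerve(pressures_kpa):
    """Run sensor readings through all three stages.

    Returns (pulse count, peak synapse level).
    """
    pulses = pulse_train(oscillator_frequency(pressures_kpa))
    return sum(pulses), synapse_response(pulses)


if __name__ == "__main__":
    print("no touch:   ", nerve([0.0, 0.0, 0.0]))
    print("light touch:", nerve([1.0, 0.0, 0.0]))
    print("firm touch: ", nerve([5.0, 5.0, 5.0]))
```

In this toy model a firmer touch yields more pulses per second, and because the pulses arrive before the previous ones have leaked away, the integrated response climbs higher. That captures, very loosely, how pulse timing can encode the strength of a stimulus.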

The artificial synaptic transistor is the real development in Bao’s work. It allows the artificial system to interact with natural, human systems as well as robotic brains.

Wide-Ranging Applications

The research, led by Zhenan Bao, a professor of chemical engineering at Stanford, was first reported in Science and was accompanied by a video that demonstrated the system’s capabilities. In the video, the Bao Lab used the technology to sense the motion of a small rod over pressure sensors. The video also showed how the technology could be used to identify Braille characters by touch. Most impressively, the researchers connected an electrode from their artificial neuron to a neuron in the body of a cockroach, using the signal to cause the insect’s leg to contract.

This proved that the artificial nerve circuit could be embedded as part of a biological system, enabling prosthetic devices that offer better neuro integration than is currently available.

“Previous prosthetics usually use a pneumatic actuation of artificial muscle, which are bulky and not so dexterous,” Prof. Lee says. “Our artificial nerve can be embedded in the prosthetics aesthetically without bulky pneumatic components. We believe that our artificial nerve can operate the artificial muscle in the prosthetics more delicately and aesthetically. Most prosthetics do not have a sensing function for touch, and the conventional prosthetics require a complicated software algorithm to make the artificial muscle move. But our mechanosensory nerve can detect touch and then the output signal can be directly transmitted to actuate the muscle.”

There is also potential for this technology in the robotics space, explains Dr. Yeongin Kim, formerly a graduate student in the Bao Lab who worked on the artificial nerves project. In particular, it could lead to the creation of so-called soft robotics, in which robots are constructed from materials that look and feel more similar to organics. The process of mimicking the synapses and neurons of the biological nervous system in the realm of robotics could go a long way toward the development of machine learning and robots that can teach themselves new skills.

“The advantage of machine learning is you don't need to teach a robot every detail,” Kim says. “You can just make it learn a difficult job, and the robot can train on that difficult task by itself.”

In these cases, hardware like the artificial nervous system can be useful because of the role that synapses play in learning and sensation.

“[In our bodies], the network of neurons and synapses can process information from the environment and control the activators that impact what we feel and how we respond,” Kim says. “That kind of signal processing can be useful in training what we want a robot to do and not do. This thinking is becoming popular in neuromorphic computing as well as robotic engineering, and we expect our system to provide the hardware architecture for machine learning that can be used in future neurorobots.”

Whatever the application, this technology is still in the early stages of development, and it remains to be seen what commercial potential it holds. One goal of the work, though, is to further the development of bio-inspired materials with soft mechanical properties that can be used in sophisticated neurorobots or neuroprostheses, performing in ways that are comparable to or even better than biological systems.

“Bioinspired soft robots and prosthetics can be used for people with neurological disorders and more,” Kim says. “There are many interesting commercial applications of our technology.”

Tim Sprinkle is an independent writer.

