Advances in Vision-Guided Robots

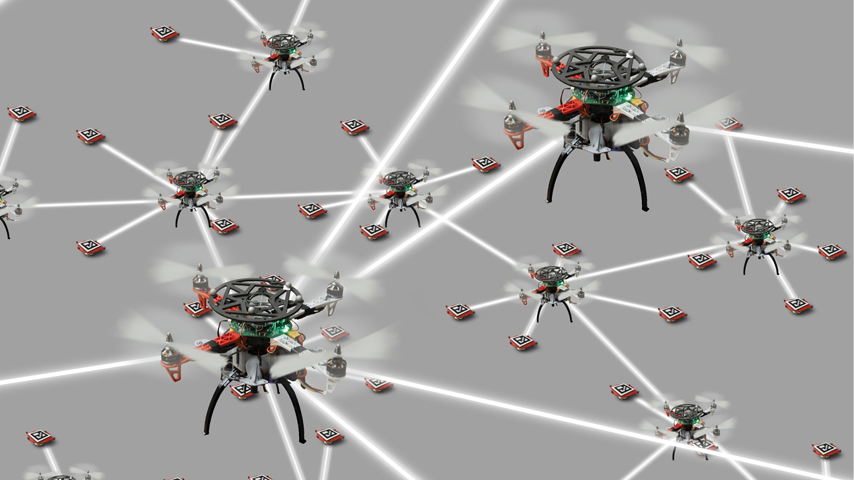

A robot searches for a potential explosive device at a noisy arcade. Image: Sandia National Laboratories

For years, vision-guided robots have been a mainstay for manufacturing and assembly tasks such as inspecting and sorting parts. These operations tend to be carried out in highly constrained, structured environments with zero obstacles. However, advances in processing power and sensor technologies are now enabling robots to undertake more unstructured tasks such as autonomous flying, driving, and mobile service activities, which require better vision systems to identify and avoid obstacles.

“For example, vision-based systems are now used for detecting and tracking people and vehicles for driving safety and self-driving systems,” says Martial Hebert, director of Carnegie Mellon University’s Robotics Institute. “Progress is being made in the fundamental approaches, as well as supporting technologies such as computing and high-quality sensing. Personal robotics are developing increasing interaction capabilities. The overall trend is toward closer collaboration between robots and humans.”

Cutting-Edge Research

Sandia National Laboratories (SNL) is conducting extensive research in telerobotics, in which a human operator typically relies on a robot’s onboard cameras to convey a sense of presence at a remote location. “However, cameras on pan-tilt units are in many ways a poor replacement for human vision,” says Robert J. Anderson, principal member of the Robotic and Security Systems Department at SNL. “Human eyesight has better resolution, a very large peripheral field of view, and the ability to make quick glances to fill in a mental model of a space. When operators work with standard cameras, they tend to get tunnel vision, and rapidly forget the locations of objects just out of the field of view.”

To improve this situation, SNL researchers have combined live vision with constructed 3D models of the remote environment to enhance an operator’s sense of space. Using gaming technology such as Microsoft’s Kinect sensor, they can scan a remote site and build a model of it. Rather than relying on a single 2D camera view, the operator can view the remote robot from any direction, much as in a first-person shooter video game, choosing an over-the-shoulder view of an avatar, a tracking view, or a world view. “This drastically reduces the problem of tunnel vision in operating remote robots,” says Anderson.
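To make the three viewpoints concrete, here is a minimal sketch of how a virtual camera might be placed in a scanned 3D model. It assumes the robot’s position and heading in the model frame are already known. The function name, the view offsets, and the coordinate conventions are hypothetical illustrations, not SNL’s implementation.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

def virtual_camera(robot_x: float, robot_y: float, robot_heading: float,
                   mode: str, scene_height: float = 20.0) -> Tuple[Vec3, Vec3]:
    """Return (eye, target) for a hypothetical virtual camera in a scanned 3D model.

    Model frame: metres, z up; robot_heading in radians, 0 along +x.
    All offsets below are illustrative assumptions.
    """
    target = (robot_x, robot_y, 0.5)  # aim at the robot body
    if mode == "over_the_shoulder":
        # 2 m behind the robot along its heading, 1.5 m up: the view turns with the robot.
        eye = (robot_x - 2.0 * math.cos(robot_heading),
               robot_y - 2.0 * math.sin(robot_heading),
               1.5)
    elif mode == "tracking":
        # Fixed offset in the world frame: follows the robot's position, ignores its heading.
        eye = (robot_x - 3.0, robot_y - 3.0, 3.0)
    elif mode == "world":
        # Overhead view of the whole scanned site, looking down at the origin.
        eye = (0.0, 0.0, scene_height)
        target = (0.0, 0.0, 0.0)
    else:
        raise ValueError(f"unknown view mode: {mode!r}")
    return eye, target

# Usage: robot at (4, 1) facing +y; the camera sits 2 m behind it, near (4, -1, 1.5).
eye, target = virtual_camera(4.0, 1.0, math.pi / 2, "over_the_shoulder")
print(eye, target)
```

What separates the views in this sketch is the frame the camera offset lives in: the over-the-shoulder offset rotates with the robot, while the tracking offset stays fixed in the world frame.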

Although GPS has become cheap and reliable enough to enable navigation in collision-free space, there is always the potential for collision during mobile, ground-based operations. A new generation of inexpensive 3D vision systems, however, will make it possible for robots to navigate autonomously in cluttered environments and to interact dynamically with the human world.

This was recently demonstrated by the Singapore-MIT Alliance for Research and Technology (SMART), which constructed a self-driving golf cart that successfully navigated around people and other obstacles during a test run at a large public garden in Singapore. The sensor network was built from off-the-shelf components.

“If you have a simple suite of strategically placed sensors and augment that with reliable algorithms, you will get robust results that require less computation and have less of a chance to get confused by ‘fusing sensors,’ or situations where one sensor says one thing and another sensor says something different,” says Daniela Rus, professor of electrical engineering and computer science at MIT and team leader.
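The kind of simple geometric rule that lets a vehicle react to people and obstacles can be illustrated with a sketch that checks a 3D point cloud for points in a corridor ahead and slows down accordingly. This is not the SMART team’s algorithm; the corridor width, height band, stop distance, linear slow-down rule, and all function names are assumptions chosen for illustration.

```python
from typing import Iterable, Optional, Tuple

Point = Tuple[float, float, float]  # vehicle frame: x forward, y left, z up (metres)

def nearest_obstacle(points: Iterable[Point], lookahead: float = 3.0,
                     half_width: float = 0.6, min_z: float = 0.1,
                     max_z: float = 1.8) -> Optional[float]:
    """Distance to the closest point inside the corridor ahead, or None if it is clear.

    Points below min_z are treated as ground, and points above max_z as overhead
    structure (branches, signs) that the vehicle can pass under.
    """
    nearest: Optional[float] = None
    for x, y, z in points:
        in_corridor = 0.0 < x <= lookahead and abs(y) <= half_width
        in_height_band = min_z <= z <= max_z
        if in_corridor and in_height_band and (nearest is None or x < nearest):
            nearest = x
    return nearest

def speed_command(points: Iterable[Point], cruise: float = 1.5,
                  stop_distance: float = 1.0, lookahead: float = 3.0) -> float:
    """Cruise when the corridor is clear, stop when close, and slow linearly in between."""
    d = nearest_obstacle(points, lookahead=lookahead)
    if d is None:
        return cruise
    if d <= stop_distance:
        return 0.0
    return cruise * (d - stop_distance) / (lookahead - stop_distance)

# Usage: a pedestrian 2 m ahead, a wall off to the side, and a ground return.
scan = [(2.0, 0.1, 0.9), (1.5, 2.0, 1.0), (0.5, 0.0, 0.02)]
print(speed_command(scan))  # 0.75
```

Only the pedestrian falls inside both the corridor and the height band, so the commanded speed drops to half of cruise speed.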

Manufacturing Transformation

No industry has been more transformed by vision-guided robots than manufacturing. The earliest robots were designed for simple pick-and-place operations. Now, with technological advances in sensors, computing power, and imaging hardware, vision-guided robots are far more capable and greatly improve product quality, throughput, and operational efficiency.

In an article on roboticstomorrow.com, Axium Solutions, a manufacturer of vision-guided robotic solutions for material handling and assembly, writes: “Enhanced computing power helps developers create more robust and complex algorithms. Improvements in pattern matching and support for 3D data enabled new applications like random bin picking, 3D pose determination, and 3D inspection and quality control. This probably explains, at least partially, why new records for machine vision systems and components in North America were established in the last two years.”

Hardware improvements for vision-guided robotics include better time-of-flight sensors, sheet-of-light triangulation scanners, and structured-light and stereo 3D cameras. “Recent advances in sheet-of-light triangulation scanners open many new possibilities for inline inspection and control applications requiring high 3D data density and high speed,” states Axium. “The latest CMOS sensors can reach a scanning speed of up to several thousand high-resolution 3D profiles per second.”
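Sheet-of-light triangulation projects a laser line onto the part and recovers depth from where that line appears in the camera image; each camera frame yields one 3D profile. The sketch below assumes a pinhole camera with focal length f (in pixels) and principal point (cx, cy), and a laser plane already calibrated as n · X = d in the camera frame. It uses plain per-column peak detection, whereas production scanners typically add sub-pixel peak estimation and lens-distortion correction. The function names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

def laser_peaks(image: Sequence[Sequence[float]], threshold: float) -> List[Optional[int]]:
    """For each column, return the row where the laser line is brightest, or None if too dim."""
    rows = len(image)
    peaks: List[Optional[int]] = []
    for u in range(len(image[0])):
        column = [image[v][u] for v in range(rows)]
        v_best = max(range(rows), key=column.__getitem__)
        peaks.append(v_best if column[v_best] >= threshold else None)
    return peaks

def triangulate_profile(peaks: Sequence[Optional[int]], f: float, cx: float, cy: float,
                        normal: Tuple[float, float, float],
                        d: float) -> List[Tuple[float, float, float]]:
    """Intersect each peak pixel's viewing ray with the laser plane n . X = d (camera frame)."""
    nx, ny, nz = normal
    profile = []
    for u, v in enumerate(peaks):
        if v is None:
            continue  # no laser return in this column (occlusion, dark surface)
        # For a pinhole camera, the ray through pixel (u, v) is X = Z * (rx, ry, 1).
        rx, ry = (u - cx) / f, (v - cy) / f
        denom = nx * rx + ny * ry + nz
        if abs(denom) < 1e-12:
            continue  # ray runs parallel to the laser plane
        z = d / denom  # from Z * (n . r) = d
        profile.append((rx * z, ry * z, z))
    return profile

# Usage: a tiny 5x3 frame; the laser plane is assumed to be Y = 0.1 m, i.e. normal (0, 1, 0).
frame = [[0, 0, 0],
         [0, 0, 0],
         [9, 0, 0],
         [0, 9, 0],
         [0, 0, 1]]
peaks = laser_peaks(frame, threshold=5)  # [2, 3, None]
print(triangulate_profile(peaks, f=100.0, cx=1.0, cy=0.0, normal=(0.0, 1.0, 0.0), d=0.1))
```

Because each frame produces only one profile, building a full 3D surface this way requires moving the part or the sensor between frames.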

The main advantage of structured-light and stereo 3D cameras over sheet-of-light triangulation is that they do not require relative motion between the sensor and the part. This allows fast generation of 3D point clouds with sufficient accuracy for good scene understanding and robot control.
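For a rectified stereo pair, depth follows from disparity d (the horizontal pixel shift of a point between the two images) as Z = f · B / d, where f is the focal length in pixels and B is the baseline between the cameras. The sketch below, with hypothetical names and an assumed rectified, already-computed disparity map, turns one pair of images into a point cloud with no motion of sensor or part.

```python
from typing import List, Sequence, Tuple

def disparity_to_points(disparity: Sequence[Sequence[float]], f: float, baseline: float,
                        cx: float, cy: float,
                        min_disparity: float = 0.5) -> List[Tuple[float, float, float]]:
    """Convert a rectified disparity map (pixels) into 3D points (metres, left-camera frame)."""
    points = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            if d < min_disparity:
                continue  # unmatched pixel, or too far away to measure reliably
            z = f * baseline / d      # depth from similar triangles
            x = (u - cx) * z / f      # back-project through the pinhole model
            y = (v - cy) * z / f
            points.append((x, y, z))
    return points

# Usage: 2x2 disparity map, f = 500 px, baseline = 0.1 m; the zero entry is skipped.
cloud = disparity_to_points([[0.0, 25.0], [50.0, 10.0]], f=500.0, baseline=0.1, cx=1.0, cy=1.0)
print(len(cloud))  # 3
```

Since Z = f · B / d, a one-pixel disparity error changes depth by roughly Z² / (f · B), so accuracy falls off with the square of distance. Many structured-light cameras apply the same relation, with the projector standing in for the second camera.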

“These and other recent developments in algorithms and sensor technologies make it possible to efficiently implement vision-guided robotics tasks for manufacturers,” Axium concludes. “Therefore, we are optimistic that more and more projects will integrate machine vision and robots in the following years.”

Mark Crawford is an independent writer. Learn more about the future of manufacturing innovation at ASME's MEED event.