Racism Runs Deep, Even Against Robots

We’ve all heard the stories. Whether it’s taxi drivers refusing to pick up passengers in certain neighborhoods, store owners casting suspicion on particular customers, or landlords refusing to rent to prospective tenants based on their names, racial bias is an entrenched part of the human experience. It’s unfortunate, but it has almost always been a fact of life.

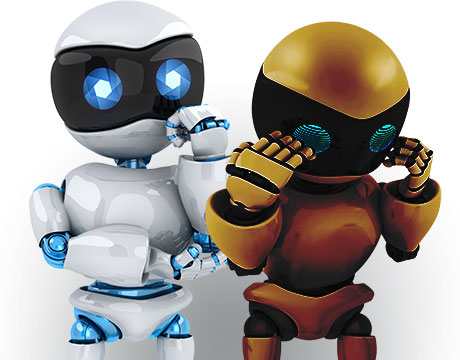

It now looks like the human tendency to identify and stereotype along racial lines is expanding into the world of robotics.

That’s according to the findings of a recent study out of the Human Interface Technology (HIT) Lab NZ, a multidisciplinary research center at the University of Canterbury in Christchurch, New Zealand. Led by Dr. Christoph Bartneck, the study, Robots and Racism, dug into the role that the color of a robot’s “skin” – that is, the color of the material it is made of – plays in how humans interact with and respond to it.

The findings were... probably a little less than surprising.

“We really didn't know whether people would ascribe a race to a robot and if this would impact their behavior towards the robots,” Dr. Bartneck said. “We were certainly surprised how clearly people associated a race to robots when asked directly. Barely anybody would admit to be a racist when asked directly, while many studies using implicit measure showed that even people that do not consider themselves to be racist exhibit racial biases.”

The study used the shooter bias paradigm and several online questionnaires to determine the degree to which people automatically identify robots as being of one race or another. The classic “shooter bias” test studied implicit racial bias based on the speed with which white subjects identified a black person as a potential threat. By adapting it to robots, the team was able to examine reactions to “racialized” robots, identifying biases that had not previously been uncovered.

In shooter bias studies, participants are put into the role of a police officer who has to quickly decide whether or not to shoot when confronted with images of people who either hold a gun in their hand or do not. Each image is shown for only a split second, and participants do not have the option to rationalize their choices. They have to act in less than a second. It’s all about instinct.

As in the human-based versions of this test, reaction-time measurements in the HIT Lab NZ study revealed that participants demonstrated bias toward both people and robots that they perceived as black.
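To make the idea of a reaction-time bias concrete, here is a minimal sketch of how such a score could be computed. It is not the analysis the HIT Lab NZ team ran: the function name `shooter_bias_ms`, the scoring formula, and every reaction time below are hypothetical, chosen only to illustrate the logic of comparing response speeds across target colors.

```python
from statistics import mean

def shooter_bias_ms(trials):
    """Return a simple bias score in milliseconds (illustrative formula).

    trials: list of (target_color, armed, reaction_time_ms) tuples,
    one per correct response. A positive score means participants
    were quicker to "shoot" armed black targets and slower to hold
    fire on unarmed black targets than on white ones.
    """
    def avg(color, armed):
        return mean(rt for c, a, rt in trials if c == color and a == armed)

    faster_to_shoot = avg("white", True) - avg("black", True)
    slower_to_hold = avg("black", False) - avg("white", False)
    return faster_to_shoot + slower_to_hold

# Made-up reaction times, NOT data from the study.
trials = [
    ("black", True, 540), ("black", True, 560),
    ("white", True, 570), ("white", True, 590),
    ("black", False, 640), ("black", False, 660),
    ("white", False, 610), ("white", False, 630),
]

bias = shooter_bias_ms(trials)
print(f"bias score: {bias} ms")
```

A score near zero would suggest that target color made little difference to how fast participants reacted.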

“We project our prejudices onto robots,” Bartneck said. “We treat robots as if they are social actors, as if they have a race. Our results showed that participants had a bias towards black robots probably without ever having interacted with a black robot. Most likely the participants will not have interacted with any robot before. But still they have a bias towards them.”

Part of the problem is that robots are becoming more and more human-like. They exhibit intentional behavior, they respond to our commands, and they can even speak. For most of us, we only know this type of behavior from other humans. When we’re faced with something we rationally know is just a computer on wheels, we socially apply all the same norms and rules as we would toward other humans because it seems so human to us, Bartneck said.

Ironically, even as we are reflecting our worst impulses onto robots, those robots are learning negative biases toward us as well. It’s happening because artificial intelligence and Big Data algorithms are simply amplifying the inequalities of the world around them. When you feed biased data into a machine, you can receive a biased software response.

Last year, for example, the Human Rights Data Analysis Group in San Francisco studied algorithms that are used by police departments to predict areas where future crime is likely to break out. By using past crime reports to teach the algorithm, these departments were inadvertently reinforcing existing biases, leading to results that showed that crime would be more likely to occur in minority neighborhoods where police were already focusing their efforts.
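The feedback loop described here can be sketched in a few lines. This is a toy model, not the algorithm the Human Rights Data Analysis Group examined: the neighborhoods, the starting counts, and the rule of always sending the patrol to the area with the most recorded crime are all assumptions made for illustration.

```python
def simulate_patrols(recorded, true_rate, rounds):
    """Toy feedback loop (hypothetical model).

    Each round, the single patrol goes to the area with the most
    recorded crime, and only that area can add new records.
    """
    recorded = dict(recorded)  # copy so the caller's data is untouched
    for _ in range(rounds):
        target = max(recorded, key=recorded.get)
        recorded[target] += true_rate[target]
    return recorded

# Hypothetical starting point: area A has slightly more past reports,
# but both areas have the SAME underlying crime rate.
history = {"A": 12, "B": 10}
true_rate = {"A": 5, "B": 5}

result = simulate_patrols(history, true_rate, rounds=20)
share_a = result["A"] / sum(result.values())
print(result, f"share of records in A: {share_a:.0%}")
```

Even though the two areas are identical in this model, the small initial gap in reports means area A ends up with nearly all of the recorded crime, simply because that is where the patrol keeps looking.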

To Dr. Aimee van Wynsberghe, an assistant professor of ethics and technology at Delft University of Technology in the Netherlands and president of the Foundation for Responsible Robotics, these findings reinforce long-held concerns about the future of human-robot interaction and should raise questions for robotic engineers as they create the next generation of humanoid machines.

“Researchers have shown that American soldiers become so attached to the robots they work with they do not want a replacement; they want the robot they know,” she said. “Other studies show that people put their trust into robots when they absolutely should not, that they are aroused at touching robots in 'private' places, that they are loath to destroy them, etc. People project characteristics onto robots that simply are not there. This tendency is referred to as anthropomorphization. In the case of the study we’re discussing here, people are simply projecting some human characteristics onto robots and seeing them as a particular race.”

The answer isn’t more “diverse” robots, she argued. Rather, robotics manufacturers and designers need to better understand their customers. They should actively be trying to prevent such biases from creeping into the robot space by avoiding designs and functions that lend themselves to easy anthropomorphization.

“We aren't biased towards black or white dogs because we don't see them as human,” she said.

Along those same lines, it’s important that robots are viewed as robots, not as pseudo-humans. Intentionally giving robots features that deceive us subconsciously into associating them with a particular gender or race is a slippery slope, and we’re already seeing what problems robot bias could create in the real world, thanks to Bartneck’s study.

After all, attempting to design a robot for a particular race could, in some cases, be seen as racist.

“We believe our findings make a case for more diversity in the design of social robots so that the impact of this promising technology is not blighted by a racial bias,” Bartneck said. “The development of an Arabic looking robot as well as the significant tradition of designing Asian robots in Japan are encouraging steps in this direction, especially since these robots were not intentionally designed to increase diversity, but they were the result of a natural design process.”

Tim Sprinkle is an independent writer.
