E-Skin Shows Success in Detecting Chemicals, Viruses

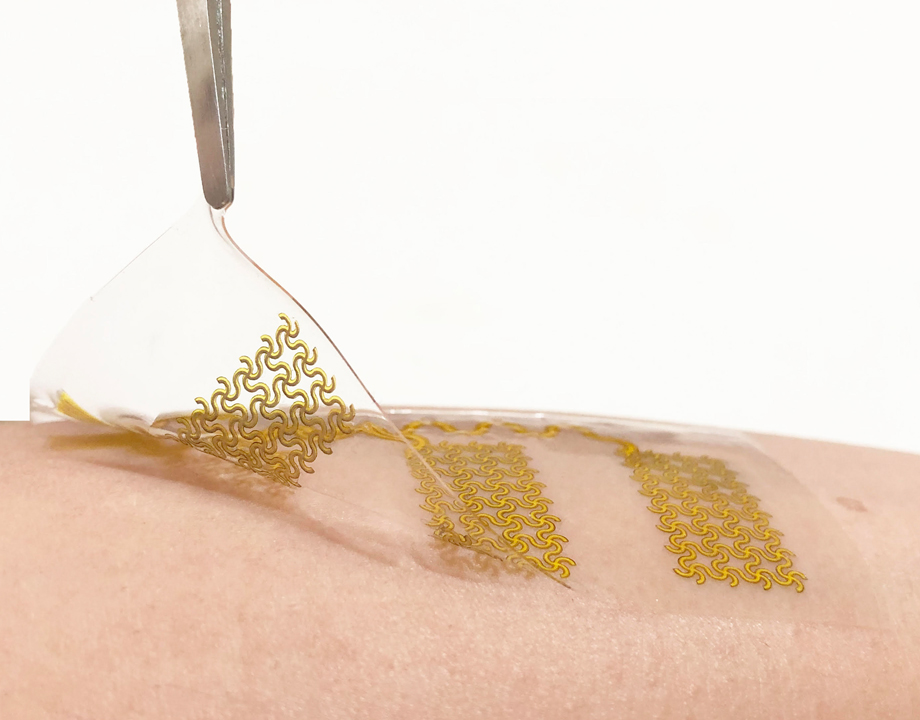

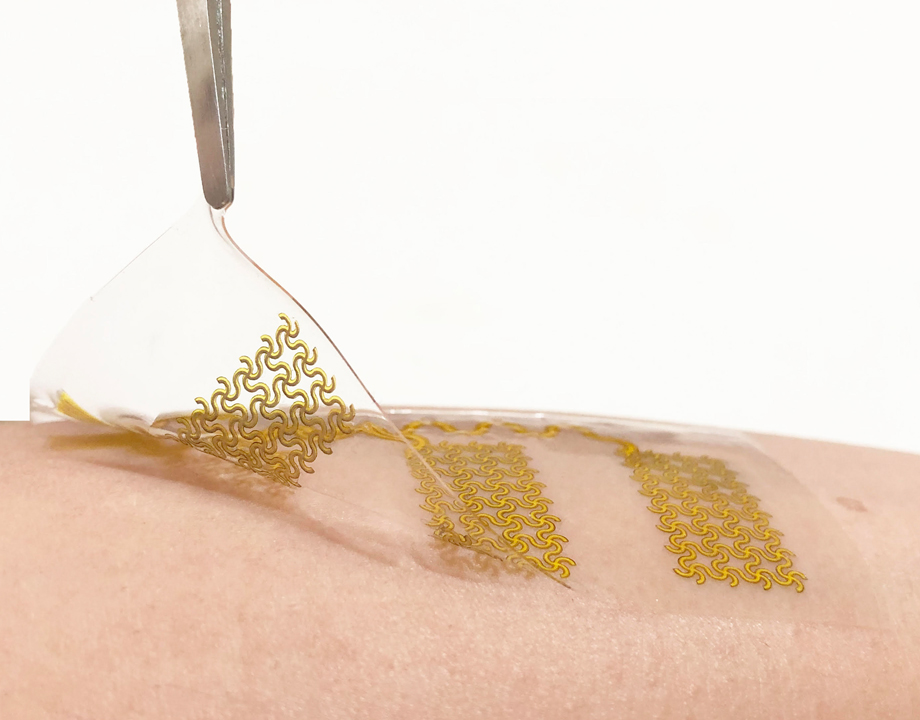

Soft electronic skin serves as a human interface for robotic sensing. Photo: Wei Gao/California Institute of Technology

To create a world where robots and humans coexist, robots should be agile, adaptive, and most of all, responsive. It will be critical for robots to sense and feel just as a human would so that they are predictable and reliable. A team of researchers at the California Institute of Technology has developed an “e-skin” that could bring this goal one step closer to reality.

Composed of many tiny sensors embedded in a hydrogel using additive manufacturing, the e-skin is overlaid onto a robotic arm, with sensors fixed to the fingertips and palm. The same sensor array is also embedded in a bandage-like patch for a human controller, who can then feel when the robotic arm sends a signal about an object’s temperature, size, and more. By communicating via an interface, the robotic arm and the human act in tandem.

“We want to make sure a human can control the robot, so that the human can ‘touch’ an object,” said Wei Gao, assistant professor of medical engineering whose lab is developing the e-skin. “So, we used the same printing approach to print another e-skin that can interface with a human’s skin to get a signal from their body and tell the robot what to do.”

What makes this interactive system unique is that in addition to temperature and pressure, the e-skin can detect a range of dry- and liquid-phase substances, including explosives, nerve agents, pesticides, and even viruses such as SARS-CoV-2, which causes COVID-19. This makes it well suited for use in harsh environments.

“There is a gap right now in the field for chemical molecule sensing,” Gao explained. “We wanted to give the robot more powerful functionality for real-world applications, so we’re working to see if we can perform multimodal physical and chemical sensing together in the same electronic skin platform.”

Together, the robot and human interface are called the M-Bot. Gao said that ideally, M-Bot should perform in situ detection of threat compounds and alert the human controller to a threat with a haptic signal. He said 3D printing has been instrumental in achieving this level of integration.

“We’re learning that we can fully print all the physical and chemical sensors and connection wires into a single patch. It’s very versatile,” Gao said.

In addition to being versatile, it’s low-cost, which means the system is scalable for almost any application one could imagine, including uses in agriculture, food processing, public health, security surveillance, and toxic environments like chemical plants.

One major project challenge for Gao and his team was finding the right material. They had to customize a special nanomaterial ink that meets the viscosity, density, and surface tension requirements for inkjet printing while maintaining the analytical functionality of the sensors.

“Not much of this work has been done in the past because it is challenging, especially, to test for dry-phase chemicals,” he said.

In a demonstration, the M-Bot successfully detected traces of a standard chemical explosive (TNT) and an organophosphate (OP) nerve agent (paraoxon-methyl) within three to four minutes. In addition, the M-Bot successfully identified a protein from the virus that causes COVID-19. Once the chemicals were detected, AI and smart algorithms instantly alerted the human controller to their presence.

The platform was also adapted to an autonomous boat, called the M-Boat, to detect chemical leaks. In an experiment, M-Boat used a specialized algorithm to track chemical traces to their source, automatically adjusting course based on the chemical’s concentration in the water.
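The article does not say which algorithm the M-Boat uses. As a rough illustration of steering by concentration, the sketch below assumes a simple gradient-following approach: sample the concentration nearby, head in the direction where it rises, and shorten the step when a move stops improving the reading. The concentration field, the source location, and all function and parameter names are hypothetical and chosen only for this example.

```python
import math

# Hypothetical source location, used only to build the toy field below.
SOURCE = (5.0, 3.0)

def concentration(x, y):
    """Toy concentration field (an assumption): highest at SOURCE, fading with distance."""
    dx, dy = x - SOURCE[0], y - SOURCE[1]
    return math.exp(-(dx * dx + dy * dy) / 20.0)

def seek_source(start, step=0.2, min_step=1e-3, probe=0.05, max_iters=1000):
    """Follow rising concentration from `start`; return the final (x, y)."""
    x, y = start
    for _ in range(max_iters):
        # Estimate the local gradient from nearby samples (central differences).
        gx = (concentration(x + probe, y) - concentration(x - probe, y)) / (2 * probe)
        gy = (concentration(x, y + probe) - concentration(x, y - probe)) / (2 * probe)
        norm = math.hypot(gx, gy)
        if norm == 0.0:
            break  # flat field: no direction to steer toward
        # Propose a fixed-length move in the uphill direction.
        nx, ny = x + step * gx / norm, y + step * gy / norm
        if concentration(nx, ny) > concentration(x, y):
            x, y = nx, ny  # reading improved: keep the new position
        else:
            step /= 2.0    # overshot the peak: take smaller steps
            if step < min_step:
                break
    return x, y
```

Calling `seek_source((0.0, 0.0))` ends close to the toy source at (5.0, 3.0). A real vessel would also have to cope with sensor noise, currents, and uneven plumes, none of which this sketch models.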

This is the first soft robotic system able to detect dry-phase chemicals. Now, with that success demonstrated, the team wants to further develop the technology to work with other interfaces, including autonomous ones that don’t require a human controller. But, Gao said, that will require a partnership.

“In the meantime, we want to focus on making the e-skin more robust,” he said. “We’ve had a successful mini trial, but we know that sensing and decision-making will be way more complicated in the real world.”

As it stands now, the e-skin represents an attractive approach to developing powerful physicochemical sensing systems that may one day pave the way not only for future intelligent soft robots, but also for autonomous vehicles and even wearable technologies.

Cassandra Martindell is a science and technology writer in Columbus, Ohio.
